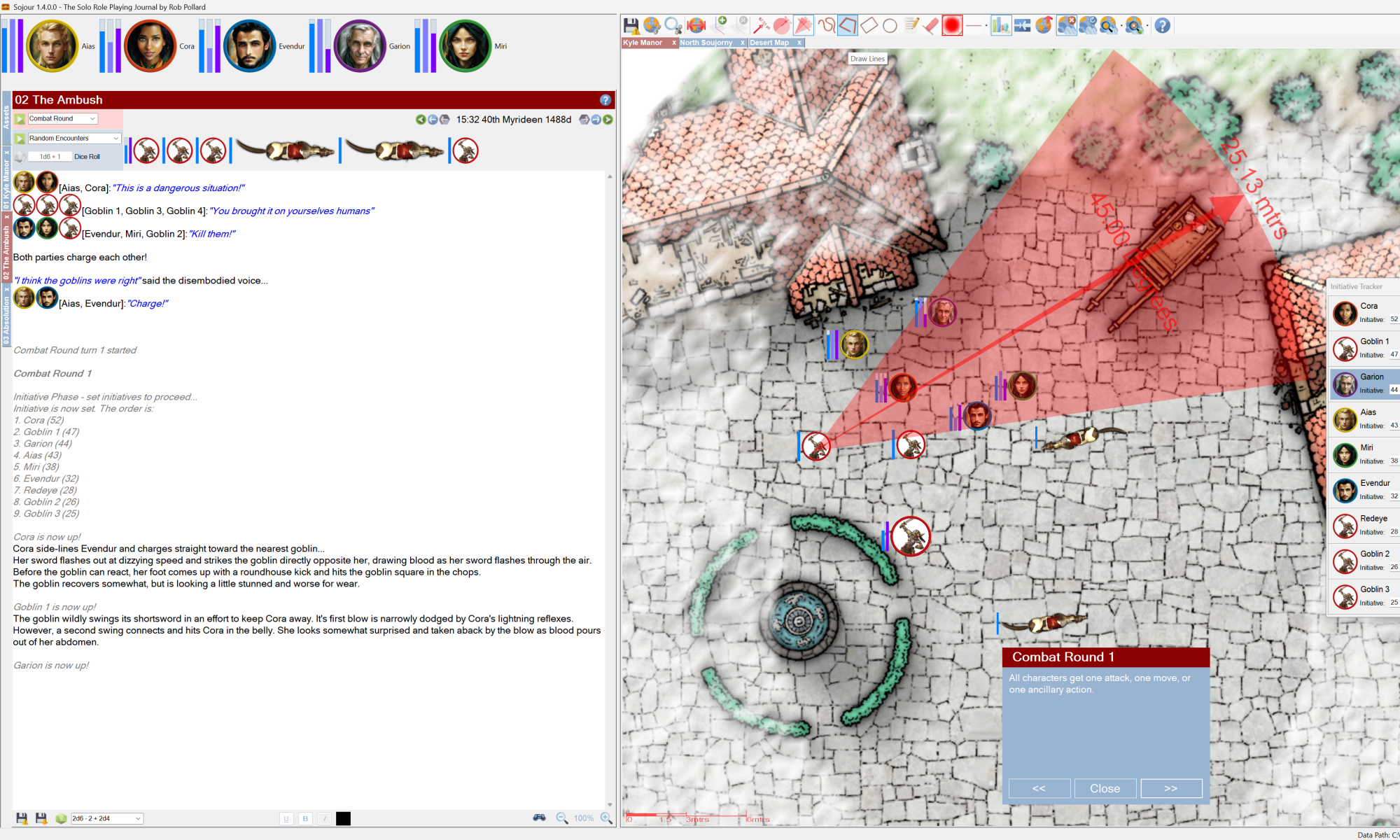

With the Fog of War release out of the door, I thought I’d get some metrics together!

This is a technical post for those that like numbers and might be especially interesting for those that write software.

I should probably preface this post by saying that my views on software development are my own and should not be considered the views of any employers that I have worked for, either past or present.

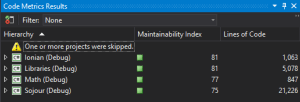

First up, the code itself. How big is it?

Sojour consists of 4 main components, not including the installer. These 4 components have a total line count of 28,214 lines of code! The installer would add another 381 lines of code to that count.

However, these figures don’t tell the whole story, as Sojour also comes with a 161-page illustrated manual and various art assets that have to be maintained.

Not all of this code was written from scratch. Quite a lot of it had been borrowed from my other project Ancient Armies.

Sojour’s maintainability index ranges from 75 to 81. This index, which is scored from 0 to 100, is a broad indication of code quality. Microsoft suggests anything from 20 to 100 is good, so I guess Sojour’s scores put it at the higher end – which is good news 🙂

How long did it take to write?

That’s a more difficult question, because when Sojour started I never took it seriously! It was considered a ‘quick and dirty’ project that reused Ancient Armies code classes to deliver a VTT more suitable for my style of play. It was never originally intended to go on sale!

There was sporadic development between December 2021 and July 2022, but I don’t know exactly how much development took place in that period, as Sojour had no development pipeline set up for it.

It wasn’t until July 2022 that I started to see its potential and take it seriously. At that time the full development pipeline was put in place. This pipeline is fully automated and allows traceability from stories to code and then on to released binaries and vice versa. It provides an internal Wiki which is also linked to stories and it can build any specific version of Sojour at a whim (handy for testing). It’s this environment that’s also responsible for Sojour’s seemingly random version numbers (not all builds are released).

Despite the lack of metrics prior to July 2022, I do have very accurate measurements subsequent to that period. All figures are from that point on and do not consider the sporadic work prior to that.

According to my system, a total of 490 hours has been sunk into the project. That’s around 61 eight-hour days in little more than a year! Not bad considering that this was all done in my free time!
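For those who like to check the arithmetic, here is a minimal Python sketch of the conversion. The function name and the 8-hour day are illustrative choices for this example; they are not part of my actual tracking system.

```python
# Figures quoted in this post.
HOURS_LOGGED = 490
HOURS_PER_DAY = 8  # assumption: one "day" means a standard 8-hour working day


def working_days(hours, hours_per_day=HOURS_PER_DAY):
    """Convert logged hours into equivalent full working days."""
    return hours / hours_per_day


days = working_days(HOURS_LOGGED)
print(f"{HOURS_LOGGED} hours = {days:.2f} eight-hour days")
```

The result is 61.25, which is where the "around 61" comes from.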

How good was I at estimating time for my work?

It turns out I was pretty pants.

I tended to get my work done in approximately 64% of the original time estimate. So, on average, 36% of every estimate turned out to be padding that I didn’t need!
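The 64% figure can be read in two ways, so here is a small Python sketch that spells out both. It is only an illustration under the assumption that the 64% is a simple ratio of actual time to estimated time; the function names are made up for this example.

```python
# Ratio of actual time to estimated time, as quoted in this post.
ACTUAL_TO_ESTIMATE = 0.64


def unused_share_of_estimate(ratio):
    """Fraction of the original estimate that was never needed."""
    return 1 - ratio


def overestimate_vs_actual(ratio):
    """How much bigger the estimate was than the time actually spent."""
    return 1 / ratio - 1


def calibrated_estimate(raw_hours, ratio=ACTUAL_TO_ESTIMATE):
    """Scale a gut-feel estimate by the historical ratio (hypothetical helper)."""
    return raw_hours * ratio


print(f"Unused share of each estimate: {unused_share_of_estimate(ACTUAL_TO_ESTIMATE):.0%}")
print(f"Estimate larger than actual by: {overestimate_vs_actual(ACTUAL_TO_ESTIMATE):.0%}")
print(f"A 10 hour gut-feel estimate calibrates to {calibrated_estimate(10):.1f} hours")
```

Measured against the estimate, 36% went unused. Measured against the time actually spent, the estimates were about 56% too large. Both describe the same data, just from different baselines.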

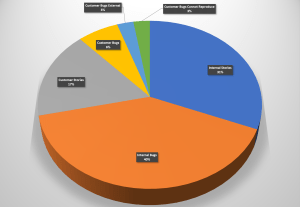

What about the story/work breakdown? What have I actually been up to?

For this I will show the metrics post-release as the pre-release metrics would seriously skew what’s presented, mostly because there were no customers pre-release!

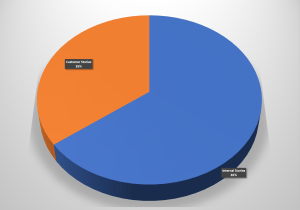

From here we can see that the stories are split almost evenly: roughly 50% bug fixing and 50% new functionality. Note that this chart and the others below are broken down by number of stories and not time. As such they don’t represent effort.

Unlike Ancient Armies, Sojour has no automated tests, at least not yet. The way development works is that a story is tested whilst it is being developed. Then I change hats and the story goes in for internal testing. It’s at this point that the internal bugs are raised and fixed.

I suspect that the lack of automated tests is down to the fact that Sojour’s design and feature set have been extremely fluid and volatile. If I were to have put in automated tests right at the beginning, those tests would have created a resistance to change that is directly proportional to their numbers – they would have hindered more than helped.

In addition, their inclusion would have pushed the release date back by at least 6 months. Adding full test coverage to any software project tends to multiply its codebase’s size anywhere from 200% to 400%, sometimes more!

The inclusion of automated tests is on the cards. But the time invested in those will take me away from time required to implement the new features that the customers want. I will need a strong business case to switch feature time out for automated test time.

For that to happen, I would need some consistently problematic areas to appear within Sojour – but thus far, they have failed to materialise.

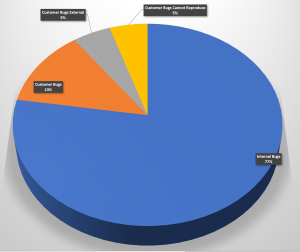

Let’s take a closer look at the bugs:

Before we start, I should probably air my views with regard to bugs and specifically how they relate to Sojour’s development.

Controversially, I consider bugs a natural and intrinsic part of the software development cycle. I view them as a form of iterative feedback. Addressing them is simply addressing that feedback and iterating on an initial code design and implementation.

One could argue that automated tests would reduce the number of internal bugs, but I would argue that for Sojour, manual testing, raising the bugs and fixing them is a faster and more flexible iterative process than writing and maintaining rigid tests. This is especially true when the majority of the detailed features of Sojour’s stories tend to be discovered during the manual testing phase – after all, the best way to test the flow of a system is to actually use it!

With that out of the way, we can concentrate on the chart’s results 🙂

The results indicate that the internal testing effort has been reasonably effective. 23% of all bugs made it to the outside world, and 43% of those are either unreproducible (normally singular reports) or the result of external factors beyond Sojour’s control (for example, Microsoft releasing a broken operating system component; those bugs tend to need quick workarounds placed within Sojour).

On the plus side, that means that 77% of bugs never make it out of the door! That’s not a bad figure, but ultimately it’s one that I aim to improve upon.
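To put those percentages on a common footing, here is a short Python sketch that expresses every slice as a share of all bugs. It assumes the 23% and 77% are shares of the total bug count and that the 43% applies only to the escaped bugs; the names are illustrative only.

```python
# Percentages quoted in this post, expressed as fractions.
ESCAPED_SHARE = 0.23                     # bugs that reached customers
NOT_ACTIONABLE_SHARE_OF_ESCAPED = 0.43   # unreproducible or caused by external factors


def bug_breakdown(escaped=ESCAPED_SHARE, not_actionable=NOT_ACTIONABLE_SHARE_OF_ESCAPED):
    """Return each category as a fraction of ALL bugs raised."""
    escaped_not_actionable = escaped * not_actionable
    return {
        "caught_internally": 1 - escaped,
        "escaped_not_actionable": escaped_not_actionable,
        "escaped_genuine": escaped - escaped_not_actionable,
    }


for label, share in bug_breakdown().items():
    print(f"{label}: {share:.1%}")
```

On those assumptions, only around 13% of all bugs are genuine, reproducible defects that slipped past internal testing.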

What about customer requests for new features? Are any being actioned?

It turns out that 65% of all new functionality comes from me, with the remaining 35% coming from the customers. This pie chart represents delivered functionality too, so I guess it does prove that I do listen to my customers!

That said, the ultimate aim will be to equalise these figures a little more.

I suspect that the bias is down to my strong product vision. I have so many ideas that I want to incorporate and these tend to bias the story pipeline.

Finally – How happy are my customers?

This is a really difficult metric to determine.

The review comments and social media comments have been really positive and Sojour tends to be favourably compared with much larger and more expensive commercial offerings:

“Overall, Sojour Solo RPG VTT offers a level of functionality and ease of use that is unmatched by any other VTT platform.” – From a review on DriveThruRPG.

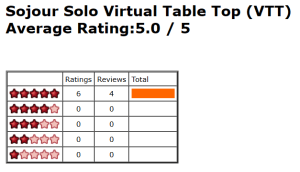

In the case of DriveThruRPG’s review scores, at the time of writing there are 10 reviews in total, every one of which is a straight 5 out of 5 stars.

Whilst that is good, 10 reviews represent a tiny percentage of the overall customer base. As a result I don’t know how the other customers feel with regard to Sojour!

(If you are a customer, please let me know how you feel about Sojour! Tell me what you like or dislike about the product. This will help shape the future direction and journey that Sojour needs to take to be the best software that it can be!)

That’s it for this post – I hope it sheds some light on what’s going on behind the scenes!

Have Fun!

RobP